AI-Assisted Fraud: Approaching the Scammer Singularity

Fraud has always evolved with the times. Every new form of communication inevitably becomes a new channel through which fraudsters can cheat people out of money and valuables. The result is an arms race: as new methods of fraud are developed, so too are new methods of detection and prevention.

Artificial intelligence (AI) represents a new paradigm for fraud. Technology may create new ways both to commit and to prevent fraud, but fraud itself still comes down to persuading a person to act against their own interest, and the fraudster's most powerful weapon remains social engineering.

Social engineering is the psychological manipulation of people into performing actions or divulging confidential information, and it is a crucial technique in successful scams. Picture a suave con man convincing someone to invest in a phony business opportunity; at its core, that is social engineering.

Scams usually involve a trade-off between being convincing and reaching many people. Methods like email phishing, in which fraudsters try to collect sensitive information by sending emails that appear to come from trusted sources, usually work by casting a wide net. By sending phishing emails in bulk, fraudsters increase the chance of reaching someone susceptible to the scam. Some phishing campaigns even use deliberately unconvincing emails, so that the few people who respond are those most easily manipulated. Anyone who takes the bait is then put in contact with a real person, who uses social engineering to get what they want from the target.

This is where AI becomes alarming. AI chatbots are now so sophisticated that many people cannot tell them apart from a real person. By leveraging AI, a fraudster no longer has to make that trade-off. They can send thousands of convincing phishing emails and put anyone they trick in contact with an AI chatbot trained to extract money or data. With AI, fraudsters can cast a wider net and catch more victims with less human effort. This is especially worrisome because some fraudsters target the elderly, who are often more vulnerable to scams and have had less exposure to AI, making them less likely to spot the subtle signs of a chatbot.

However they attract and screen victims, these scams rely on impersonation. In an impersonation scam, a fraudster assumes the identity of someone with authority, such as a bank representative or IT technician, and exploits the victim's trust in that position. Traditionally, impersonation scams have been limited to authority figures, because it is difficult to mimic someone the victim knows personally. AI removes that limitation. Recent advances allow AI to clone someone's voice from just a few minutes of audio and to generate images of them in fabricated scenarios, making it possible to convincingly impersonate someone the victim actually knows and trusts.

Impersonating a victim's family member used to be limited to text messages. Fraudsters would pose as a relative in a time-sensitive situation, such as a frozen bank account or trouble at a border crossing while on vacation, but the key element was always an urgent need for money. Scammers deliberately create a false sense of urgency, because victims who are caught up in the story and not thinking critically are less likely to catch on. With AI, scammers can back up the story with fabricated proof, making it far more convincing. A scammer claiming that a relative has been in an accident on vacation and needs money for hospital bills can generate images of that relative and clone their voice to leave a message. A family member who acts hastily in such a situation can hardly be blamed, and once the money is sent, there is very little chance of recovering it.

In the digital age, people share much of their lives and personalities online, which gives scammers ample material to work with. Mimicking someone's face and voice is one thing, but combined with a scammer's ability to study the mark and build a convincing story, it creates a deception that can fool even the most cautious individuals. Worst of all, many people will be completely convinced once they believe they are seeing their loved one's face and hearing their voice. In those moments, the natural instinct to protect or help someone they care about can override any skepticism, and the emotional connection makes it extremely difficult to question the authenticity of what they are experiencing. Scammers exploit this trust, knowing that once a victim feels emotionally invested, they are more likely to comply with urgent requests: sending money, sharing sensitive information, or even granting access to private accounts. This emotional manipulation, powered by AI-driven impersonation, leaves victims vulnerable in ways that were previously unimaginable.

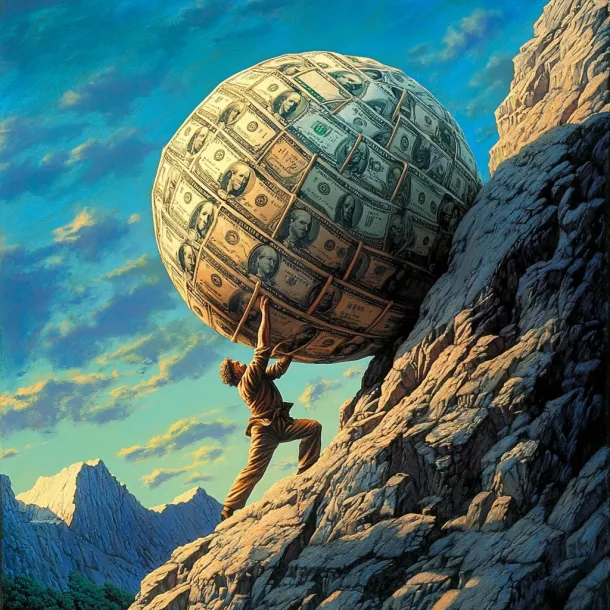

These AI tools represent a major leap in the effectiveness of scams. AI can also be used for defence: AI systems can analyze vast amounts of data, identify suspicious patterns, and flag potentially fraudulent activity faster and more accurately than human reviewers, and they could warn potential victims of scams, including AI-driven ones. Preventing fraud, however, is inherently harder than perpetrating it. Fraudsters can use AI to refine their tactics too, and they hold the advantage of innovation and unpredictability: they can constantly adjust their methods to slip past defences, while fraud prevention systems must be reactive, identifying and adapting to new threats as they emerge. Worse, AI-generated scams that combine deepfake voices, images, and personalized social engineering are increasingly difficult to detect. In this constant game of cat and mouse, the perpetrator needs to find only one vulnerability, while defenders must cover every possible angle, which makes fraud prevention the more difficult and resource-intensive task.

If you have been the victim of a scam and would like to discuss your legal options, please contact our office today to set up a consultation.
